Originally published on the Just Eat Takeaway Engineering Blog.

We presented our modular iOS architecture in a previous article, and I gave a talk about it at Swift Heroes 2020. In this article, we’ll analyse the challenges we faced integrating the modular architecture with our CI pipelines, and the reasoning behind migrating to a monorepo.

The Problem

Having several modules in separate repositories causes 2 main problems:

- Each module is versioned independently from the consuming app

- Each change involves at least 2 pull requests: 1 for the module and 1 for the integration in the app

While the above was acceptable in a world where we had 2 different codebases, it soon became unnecessarily convoluted after we migrated to a new, global codebase. New module versions are developed with the sole goal of being adopted by the only global codebase in use, which made us realise we could simplify the change process.

The monorepo approach has been discussed at length by the community for a few years now. Many talking points have come out of these conversations, even leading to an interesting story as told by Uber. In short, it entails putting all the code owned by the team in a single repository, precisely solving the 2 problems stated above.

Monorepo structure

The main advantage of a monorepo is a streamlined PR process that doesn’t require us to raise multiple PRs, de facto reducing the number of pull requests to one.

It also simplifies the versioning, allowing module and app code (ultimately shipped together) to be aligned using the same versioning.

The first step towards a monorepo was to move the content of the repositories of the modules to the main app repo (we’ll call it “monorepo” from now on). Since we rely on CocoaPods, the modules would be consumed as development pods.

Here’s a brief summary of the steps used to migrate a module to the monorepo:

- Inform the relevant teams about the upcoming migration

- Make sure there are no open PRs in the module repo

- Make the repository read-only and archive it

- Copy the module to the Modules folder of the monorepo (it’s possible to merge 2 repositories and keep the history, but we wanted to keep the process simple; the old history is still available in the old repo anyway)

- Delete the module’s `.git` folder (or it would cause a git submodule)

- Remove `Gemfile` and `Gemfile.lock`, the `fastlane` folder, the `.gitignore` file, `sonar-project.properties` and `.swiftlint.yml`, so that the monorepo’s own copies are used instead

- Update the monorepo’s `CODEOWNERS` file with the module codeowners

- Remove the `.github` folder

- Modify the app `Podfile` to point to the module as a dev pod and install it

- Make sure all the modules’ demo apps in the monorepo refer to the new module as a dev pod (if they depend on it at all). The same applies to the module under migration.

- Delete the CI jobs related to the module

- Leave the podspecs in the private Specs repo (they might be needed to build old versions of the app)

The above assumes that CI is configured to keep running the same integration steps whenever a module changes. We’ll discuss how later in this article.
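Several of the checklist steps are plain file removals, so they are easy to double-check before merging a migration PR. The Groovy sketch below is hypothetical: the `migrationLeftovers` function and the idea of running it as a check are assumptions, not part of the process described above. It lists which of the entries removed by the checklist are still present in `Modules/<ModuleName>`.

```groovy
import java.nio.file.Files
import java.nio.file.Path

// Hypothetical helper: returns the checklist entries still present in
// Modules/<moduleName>, in checklist order. An empty list means the module
// folder has been cleaned up.
List<String> migrationLeftovers(Path repoRoot, String moduleName) {
    // Entries removed during the migration: .git (it would turn the folder into
    // a git submodule) plus the tooling files the monorepo already provides.
    List<String> removedEntries = [
        '.git', 'Gemfile', 'Gemfile.lock', 'fastlane', '.gitignore',
        'sonar-project.properties', '.swiftlint.yml', '.github'
    ]
    Path moduleDir = repoRoot.resolve('Modules').resolve(moduleName)
    return removedEntries.findAll { Files.exists(moduleDir.resolve(it)) }
}
```

A CI step could fail when the returned list is non-empty; the remaining checklist items (CODEOWNERS, Podfiles, CI jobs) still need a human review.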

Not all the modules could be migrated to the monorepo, because second-level dependencies (pods referenced in another module’s podspec) need to live in separate repositories in order to be referenced in the podspec of a development pod; if this is not done correctly, CocoaPods will not be able to install them. We considered moving these dependencies to the monorepo whilst maintaining separate versioning. However, the main problem with this approach is that their version tags might conflict with the app’s. Even though CocoaPods supports tags that don’t respect semantic versioning (for example, tags prefixed with the name of the module), violating semantic versioning just didn’t feel right.

EDIT: we’ve learned that it’s possible to move such dependencies to the monorepo. The trick is not to define `:path =>` in the podspecs, but to do so in the Podfile of the main app, which is all CocoaPods needs to work out the location of the dependency on disk.

Swift Package Manager considerations

We investigated the possibility of migrating from CocoaPods to Apple’s Swift Package Manager. Unfortunately, when it comes to handling the equivalent of development pods, Swift Package Manager really falls down for us. It turns out that Swift Package Manager only supports one package per repo, which is frustrating because the process of working with editable packages is surprisingly powerful and transparent.

Version pinning rules

While development pods don’t need to be versioned, other modules still do. This is either because of their open-source nature or because they are second-level dependencies (referenced in other modules’ podspecs).

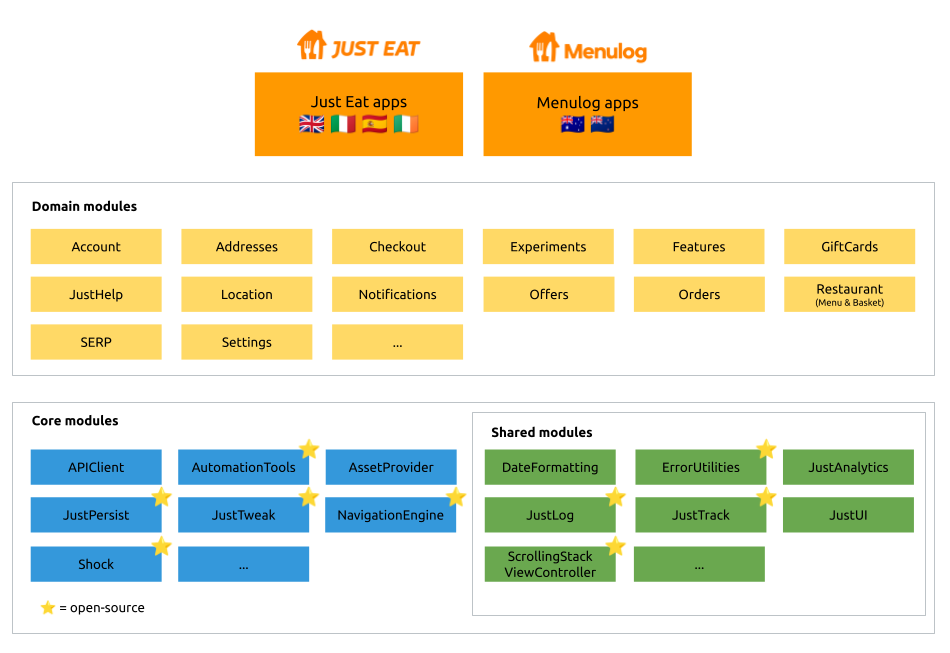

Here’s a revised overview of the current modular architecture in 2021.

We categorised our pods to better clarify what rules should apply when it comes to version pinning both in the Podfiles and in the podspecs.

Open-Source pods

Our open-source repositories on github.com/justeat are only used by the app.

- Examples: JustTweak, AutomationTools, Shock

- Pinning in other modules’ podspec: NOT APPLICABLE, as open-source pods don’t appear in any podspec (those that do are called ‘open-source shared’)

- Pinning in other modules’ Podfile (demo apps): PIN (e.g. AutomationTools in Orders demo app’s Podfile)

- Pinning in main app’s Podfile: PIN (e.g. AutomationTools)

Open-Source shared pods

Just Eat pods that we open-sourced on github.com/justeat and that are used by both modules and apps.

- Examples: JustTrack, JustLog, ScrollingStackViewController, ErrorUtilities

- Pinning in other modules’ podspec: PIN w/ optimistic operator (e.g. JustTrack in Orders)

- Pinning in other modules’ Podfile (demo apps): PIN (e.g. JustTrack in Orders demo app’s Podfile)

- Pinning in main app’s Podfile: DON’T LIST, as the latest compatible version is picked by CocoaPods (e.g. JustTrack). LIST & PIN if the pod is explicitly used in the app too, so we don’t magically inherit it.

Internal Domain pods

Domain modules (yellow).

- Examples: Orders, SERP, etc.

- Pinning in other modules’ podspec: NOT APPLICABLE, as domain pods don’t appear in other pods’ podspecs (domain modules don’t depend on other domain modules)

- Pinning in other modules’ Podfile (demo apps): PIN only if the pod is used in the app code, rarely the case (e.g. Account in Orders demo app’s Podfile)

- Pinning in main app’s Podfile: PIN (e.g. Orders)

Internal Core pods

Core modules (blue), excluding the open-source ones.

- Examples: APIClient, AssetProvider

- Pinning in other modules’ podspec: NOT APPLICABLE, as core pods don’t appear in other pods’ podspecs (core modules are only used in the app(s))

- Pinning in other modules’ Podfile (demo apps): PIN only if pod is used in the app code (e.g. APIClient in Orders demo app’s Podfile)

- Pinning in main app’s Podfile: PIN (e.g. NavigationEngine)

Internal shared pods

Shared modules (green), excluding the open-source ones.

- Examples: JustUI, JustAnalytics

- Pinning in other modules’ podspec: DON’T PIN (e.g. JustUI in Orders podspec)

- Pinning in other modules’ Podfile (demo apps): PIN (e.g. JustUI in Orders demo app’s Podfile)

- Pinning in main app’s Podfile: PIN (e.g. JustUI)

External shared pods

Any non-Just Eat pod used by any internal or open-source pod.

- Examples: Usabilla, SDWebImage

- Pinning in other modules’ podspec: PIN (e.g. Usabilla in Orders)

- Pinning in other modules’ Podfile (demo apps): DON’T LIST because the version is forced by the podspec. LIST & PIN if the pod is explicitly used in the app too, so we don’t magically inherit it. Pinning is irrelevant but good practice.

- Pinning in main app’s Podfile: DON’T LIST because the version is forced by the podspec(s). LIST & PIN if the pod is explicitly used in the app too, so we don’t magically inherit it. Pinning is irrelevant but good practice.

External pods

Any non-Just Eat pod used by the app only.

- Examples: Instabug, GoogleAnalytics

- Pinning in other modules’ podspec: NOT APPLICABLE, as external pods don’t appear in any podspec (those that do are called ‘external shared’)

- Pinning in other modules’ Podfile (demo apps): PIN only if the pod is used in the app code, rarely the case (e.g. Promis)

- Pinning in main app’s Podfile: PIN (e.g. Adjust)

Pinning is a good solution because it guarantees that we always build the same software, regardless of newly released versions of dependencies. However, pinning every dependency all the time also makes the dependency graph hard to keep up to date. This is why we decided to allow some flexibility in a few cases.

Following is some more reasoning.

Open-source

For “open-source shared” pods, we are optimistic enough (pun intended) to tolerate the usage of the optimistic operator `~>` in the podspecs of other pods (e.g. Orders using JustTrack), so that when a new patch version is released, the consuming pod gets it for free upon running `pod update`.

We have control over our code and, by respecting semantic versioning, we guarantee that the consuming pod always builds. In case of new minor or major versions, we would have to update the podspecs of the consuming pods, which is appropriate.
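To make the optimistic operator concrete: CocoaPods reads `~> 4.1.2` as “at least 4.1.2, but below 4.2”, and `~> 4.1` as “at least 4.1, but below 5.0”. The Groovy model below illustrates that rule. It is a simplified sketch (numeric versions only, no pre-release tags), not CocoaPods’ actual implementation, and the function names and version numbers are made up for illustration.

```groovy
// Simplified model of CocoaPods' optimistic operator: "~> base" accepts versions
// that are >= base and < the next "step", obtained by dropping the last segment of
// base and incrementing the new last one ("~> 4.1.2" -> < 4.2, "~> 4.1" -> < 5).
// Assumes purely numeric versions (no pre-release or build metadata).

List<Integer> parseVersion(String version) {
    return version.tokenize('.').collect { it as Integer }
}

// Compares two versions segment by segment, treating missing segments as 0.
int compareVersions(List<Integer> a, List<Integer> b) {
    int length = Math.max(a.size(), b.size())
    for (int i = 0; i < length; i++) {
        int x = i < a.size() ? a[i] : 0
        int y = i < b.size() ? b[i] : 0
        if (x != y) {
            return x <=> y
        }
    }
    return 0
}

boolean satisfiesOptimistic(String candidate, String base) {
    List<Integer> lower = parseVersion(base)
    // Upper bound: drop the last segment (unless there is only one), then bump it.
    List<Integer> upper = new ArrayList<Integer>(lower.size() > 1 ? lower[0..-2] : lower)
    upper[upper.size() - 1] = upper[upper.size() - 1] + 1
    List<Integer> version = parseVersion(candidate)
    return compareVersions(version, lower) >= 0 && compareVersions(version, upper) < 0
}
```

With a podspec requirement of `~> 4.1.2`, any 4.1.x patch release at or above 4.1.2 is picked up by `pod update`, while 4.2.0 requires editing the podspec, which is the trade-off described above.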

Also, we only need to list an “open-source shared” pod in the main app’s Podfile if it is directly used by the app code.

External

We don’t have control over the “external” and “external shared” pods, therefore we always pin their version in the appropriate place. New patch versions might not truly respect semantic versioning and we don’t want to pull in new code unintentionally. As a rule of thumb, we prefer injecting external pods instead of creating a dependency in the podspec.

Internal

Internal shared pods can change frequently (though not as often as domain modules). For this reason, we decided to relax a constraint we had and not pin their version in the podspecs. This might cause the consuming pod to break when a new version of an “internal shared” pod is released and we run `pod update`, a compromise we can tolerate. The alternative, pinning the version, would cause too much work updating the podspecs of the domain modules.
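The podspec column of the pinning rules is mechanical enough to lint. The sketch below is a hypothetical check, not something described in this article: the category identifiers, the `podspecViolations` name, the regular expression (which only understands simple `s.dependency 'Name', 'requirement'` lines) and the example versions are all assumptions.

```groovy
import java.util.regex.Matcher
import java.util.regex.Pattern

// Hypothetical lint for the podspec column of the pinning rules. `categories` maps
// pod names to 'openSourceShared', 'internalShared' or 'externalShared'; any other
// (or unknown) pod is reported, since the other categories never appear in podspecs.
List<String> podspecViolations(String podspec, Map<String, String> categories) {
    // Matches simple lines such as: s.dependency 'JustTrack', '~> 4.1'
    Pattern dependencyLine = ~/^\s*\w+\.dependency\s+['"]([^'"]+)['"](?:\s*,\s*['"]([^'"]+)['"])?/
    List<String> violations = []
    podspec.eachLine { String line ->
        Matcher match = dependencyLine.matcher(line)
        if (!match.find()) return            // not a dependency line, skip it
        String name = match.group(1)
        String requirement = match.group(2)  // null when no version is given
        switch (categories[name]) {
            case 'openSourceShared':         // PIN with the optimistic operator
                if (!requirement?.startsWith('~>')) {
                    violations << name + ": expected an optimistic '~>' requirement"
                }
                break
            case 'internalShared':           // DON'T PIN
                if (requirement != null) {
                    violations << name + ': internal shared pods should not be pinned'
                }
                break
            case 'externalShared':           // PIN to an exact version
                if (requirement == null || !(requirement ==~ /(=\s*)?\d+(\.\d+)*/)) {
                    violations << name + ': expected an exact version'
                }
                break
            default:
                violations << name + ': not expected in a podspec'
        }
    }
    return violations
}
```

Keeping the category map outside the function keeps the rules in one place; where that map would live in a real setup is left open here.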

Continuous Integration changes

With modules in separate repositories, the CI was quite simply replicating the same steps for each module:

- install pods

- run unit tests

- run UI tests

- generate code coverage

- submit code coverage to SonarQube

Moving the modules to the monorepo meant creating smart CI pipelines that would run the same steps upon modules’ changes.

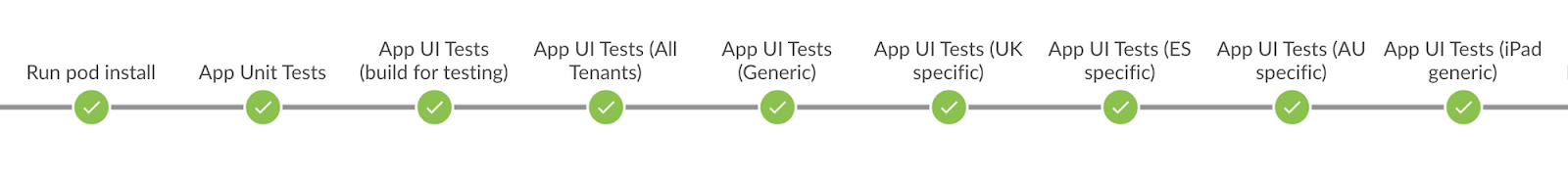

If a pull request changes only app code, there is no need to run any step for the modules, just the usual steps for the app:

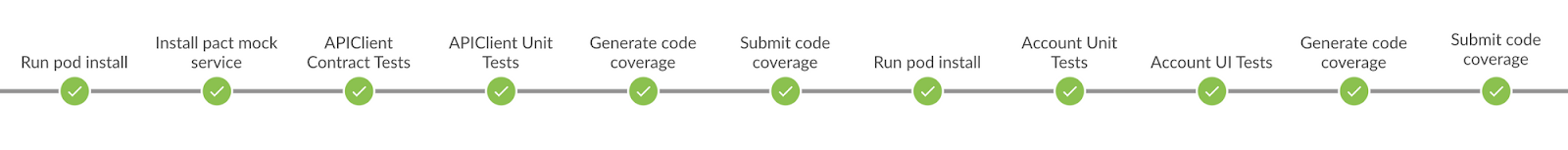

If, instead, a pull request changes one or more modules, we want the pipeline to first run the steps for the modules, and then the steps for the app:

Even if there are no changes in the app code, module changes are likely to affect the app’s behaviour, so it’s important to always run the app tests.

We achieved the above setup by constructing our Jenkins pipelines dynamically. The solution should scale as new modules are added to the monorepo and, for this reason, it’s important that all modules:

- respect the same project setup (generated by CocoaPods with the `pod lib create` command)

- use the same naming conventions for the test schemes (`UnitTests`/`ContractTests`/`UITests`)

- make use of Apple Test Plans

- are in the same location (the `./Modules/` folder).

The following excerpt, from the Jenkinsfile used for pull request jobs, constructs the modules’ stages.

```groovy
scripts = load "./Jenkins/scripts/scripts.groovy"

def modifiedModules = scripts.modifiedModulesFromReferenceBranch(env.CHANGE_TARGET)
def modulesThatNeedUpdating = scripts.modulesThatNeedUpdating(env.CHANGE_TARGET)
def modulesToRun = (modulesThatNeedUpdating + modifiedModules).unique()

sh "echo \"List of modules modified on this branch: ${modifiedModules}\""
sh "echo \"List of modules that need updating: ${modulesThatNeedUpdating}\""
sh "echo \"Pipeline will run the following modules: ${modulesToRun}\""

for (int i = 0; i < modulesToRun.size(); ++i) {
    def moduleName = modulesToRun[i]

    stage('Run pod install') {
        sh "bundle exec fastlane pod_install module:${moduleName}"
    }

    def schemes = scripts.testSchemesForModule(moduleName)

    schemes.each { scheme ->
        switch (scheme) {
            case "UnitTests":
                stage("${moduleName} Unit Tests") {
                    sh "bundle exec fastlane module_unittests \
                        module_name:${moduleName} \
                        device:'${env.IPHONE_DEVICE}'"
                }
                stage("Generate ${moduleName} code coverage") {
                    sh "bundle exec fastlane generate_sonarqube_coverage_xml"
                }
                stage("Submit ${moduleName} code coverage to SonarQube") {
                    sh "bundle exec fastlane sonar_scanner_pull_request \
                        component_type:'module' \
                        source_branch:${env.BRANCH_NAME} \
                        target_branch:${env.CHANGE_TARGET} \
                        pull_id:${env.CHANGE_ID} \
                        project_key:'ios-${moduleName}' \
                        project_name:'iOS ${moduleName}' \
                        sources_path:'./Modules/${moduleName}/${moduleName}'"
                }
                break

            case "ContractTests":
                stage('Install pact mock service') {
                    sh "bundle exec fastlane install_pact_mock_service"
                }
                stage("${moduleName} Contract Tests") {
                    sh "bundle exec fastlane module_contracttests \
                        module_name:${moduleName} \
                        device:'${env.IPHONE_DEVICE}'"
                }
                break

            case "UITests":
                stage("${moduleName} UI Tests") {
                    sh "bundle exec fastlane module_uitests \
                        module_name:${moduleName} \
                        number_of_simulators:${env.NUMBER_OF_SIMULATORS} \
                        device:'${env.IPHONE_DEVICE}'"
                }
                break

            default:
                break
        }
    }
}
```

And here are the helper functions that make it all work:

```groovy
// Returns the modules (folders under ./Modules) touched by the current branch
// compared to the reference branch.
def modifiedModulesFromReferenceBranch(String referenceBranch) {
    def script = "git diff --name-only remotes/origin/${referenceBranch}"
    def filesChanged = sh script: script, returnStdout: true
    Set modulesChanged = []
    filesChanged.tokenize("\n").each {
        def components = it.split('/')
        if (components.size() > 1 && components[0] == 'Modules') {
            def module = components[1]
            modulesChanged.add(module)
        }
    }
    return modulesChanged
}

// Returns the unmodified modules whose demo app depends on at least one
// modified module, according to the demo app's Podfile.lock.
def modulesThatNeedUpdating(String referenceBranch) {
    def modifiedModules = modifiedModulesFromReferenceBranch(referenceBranch)
    def allModules = allMonorepoModules()
    def modulesThatNeedUpdating = []
    for (module in allModules) {
        def podfileLockPath = "Modules/${module}/Example/Podfile.lock"
        def dependencies = podfileDependencies(podfileLockPath)
        def dependenciesIntersection = dependencies.intersect(modifiedModules) as TreeSet
        Boolean moduleNeedsUpdating = (dependenciesIntersection.size() > 0)
        if (moduleNeedsUpdating && !modifiedModules.contains(module)) {
            modulesThatNeedUpdating.add(module)
        }
    }
    return modulesThatNeedUpdating
}

// Extracts pod names from Podfile.lock entries such as
// "  - APIClient (1.2.0)" or "  - APIClient (from `../../APIClient`)".
def podfileDependencies(String podfileLockPath) {
    def dependencies = []
    def fileContent = readFile(file: podfileLockPath)
    fileContent.tokenize("\n").each { line ->
        def trimmedLine = line.trim()
        def parenthesisIndex = trimmedLine.indexOf('(')
        // Take everything between the leading "- " and the "(", so that pod
        // names containing a hyphen are kept whole.
        if (trimmedLine.startsWith('- ') && parenthesisIndex > 2) {
            dependencies.add(trimmedLine.substring(2, parenthesisIndex).trim())
        }
    }
    return dependencies
}

// Lists the folders under ./Modules, i.e. all the modules in the monorepo.
def allMonorepoModules() {
    def modulesList = sh script: "ls Modules", returnStdout: true
    return modulesList.tokenize("\n").collect { it.trim() }
}

// Returns the test schemes of a module's example project, keeping only the
// conventional names handled by the pipeline.
def testSchemesForModule(String moduleName) {
    def script = "xcodebuild -project ./Modules/${moduleName}/Example/${moduleName}.xcodeproj -list"
    def projectEntities = sh script: script, returnStdout: true
    def schemesPart = projectEntities.split('Schemes:')[1]
    def schemesPartLines = schemesPart.split(/\n/)
    def trimmedLines = schemesPartLines.collect { it.trim() }
    def filteredLines = trimmedLines.findAll { !it.allWhitespace }
    def allowedSchemes = ['UnitTests', 'ContractTests', 'UITests']
    def testSchemes = filteredLines.findAll { allowedSchemes.contains(it) }
    return testSchemes
}

// Required so that `load` returns an object exposing the functions above.
return this
```

You might have noticed the `modulesThatNeedUpdating` method in the code above. Each module comes with a demo app that uses the dependencies listed in its Podfile, and other monorepo modules may be listed there as development pods. This means that, besides the steps for the main app, we also have to run the steps for every module whose demo app consumes a modified module.

For example, the Orders demo app uses APIClient, meaning that pull requests with changes in APIClient will generate pipelines including the Orders steps.
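The selection logic can be separated from the Jenkins steps, which makes it easy to reason about and unit test locally. The sketch below is a hypothetical, pure-Groovy restatement of `modifiedModulesFromReferenceBranch` and `modulesThatNeedUpdating`: instead of calling `git` and `readFile`, it takes the changed file paths and a map from each module to the pods listed in its demo app’s `Podfile.lock`. The function name `modulesToRun` and the map shape are assumptions.

```groovy
// Hypothetical, Jenkins-free restatement of the selection done by
// modifiedModulesFromReferenceBranch and modulesThatNeedUpdating.
// changedFiles: paths as printed by `git diff --name-only`.
// demoAppDependencies: module name -> pod names listed in its demo app's Podfile.lock.
List<String> modulesToRun(List<String> changedFiles, Map<String, List<String>> demoAppDependencies) {
    // Modules touched directly: the second path component under "Modules/".
    Set<String> modified = changedFiles
        .collect { it.split('/') }
        .findAll { it.size() > 1 && it[0] == 'Modules' }
        .collect { it[1] } as LinkedHashSet
    // Unmodified modules whose demo app depends on a modified module.
    List<String> dependents = demoAppDependencies.findAll { module, deps ->
        !modified.contains(module) && deps.any { modified.contains(it) }
    }.keySet() as List
    // Same ordering as the Jenkinsfile: dependents first, then modified modules.
    return (dependents + (modified as List)).unique()
}
```

With the Orders demo app depending on APIClient, a change under `Modules/APIClient/` selects both Orders and APIClient, matching the example above; a change touching only app code selects no modules, and the app stages run in either case.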

Pipeline parallelization

One thing we initially thought worth considering was parallelising the pipelines across different nodes. We use parallelisation for the release pipelines and learned that, while it seems a fundamental requirement at first, it soon proved neither particularly desirable nor truly necessary for the pull request pipeline.

We’ll discuss our CI setup in a separate article, but suffice it to say that we have aggressively optimised it and managed to reduce the agent pool from 10 to 5 while maintaining a good level of service.

Parallelisation noticeably complicates the Jenkinsfiles and their maintainability, spreads the cost of checking out the repository across nodes and makes the logs harder to read. The main benefit would come from running the app UI tests on different nodes. In WWDC session 413, Apple recommends generating the `.xctestrun` file using the `build-for-testing` option of `xcodebuild` and distributing it across the other nodes. Since our app is quite large, the build products that have to be shipped along with the `.xctestrun` file are also large, and transferring them has its costs, both in time and bandwidth usage.

All things considered, we decided to keep the majority of our pipelines serial.

EDIT: In 2022 we parallelised our PR pipeline into 4 branches:

- Validation steps (linting, Fastlane lanes tests, etc.)

- App unit tests

- App UI tests (short enough that there’s no need to share `.xctestrun` across nodes)

- Modified modules unit tests

- Modified modules UI tests
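A layout like this maps naturally onto the `parallel` step of a scripted Jenkinsfile. The fragment below is a hypothetical sketch, not the actual Jenkinsfile: the `onNewNode` helper and the fastlane lane names (`validate`, `app_unittests`, `app_uitests`) are placeholders, and the module branches would reuse the per-module stages from the excerpt shown earlier, filtered by scheme. It also shows where the checkout cost mentioned above comes from: every branch checks out the repository on its own node.

```groovy
// Hypothetical scripted-pipeline fragment: one parallel branch per item above.
// Lane names are placeholders, not real lanes from this article.
def onNewNode(Closure body) {
    return {
        node {
            checkout scm  // every branch pays for its own checkout
            body()
        }
    }
}

parallel(
    'Validation': onNewNode {
        stage('Validation') { sh 'bundle exec fastlane validate' }
    },
    'App unit tests': onNewNode {
        stage('App Unit Tests') { sh 'bundle exec fastlane app_unittests' }
    },
    'App UI tests': onNewNode {
        // Built and run on the same node, so no .xctestrun file is shared.
        stage('App UI Tests') { sh 'bundle exec fastlane app_uitests' }
    },
    'Modified modules unit tests': onNewNode {
        // Per-module "UnitTests" stages, as in the pull request excerpt.
    },
    'Modified modules UI tests': onNewNode {
        // Per-module "UITests" stages, as in the pull request excerpt.
    }
)
```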

Conclusions

We have used the setup described in this article since mid-2020 and we are very satisfied with it. We discussed the pipeline used for pull requests, which is the most relevant one when it comes to embracing a monorepo structure. We have a few more pipelines for various use cases, such as verifying changes in release branches, keeping the code coverage metrics up to date with jobs running on triggers, and archiving the app for internal usage and for the App Store.

We hope to have given you some useful insights on how to structure a monorepo and its CI pipelines, especially if you have a structure similar to ours.